This post is a detailed analysis of the steps we’ve taken to improve our EmojiNews chatbot. Please let us know in the comments if you have any feedback!

Emojis as an emotional layer

Emojis are becoming prevalent in today’s communication. You know something is far beyond being “a thing” when even your grandma’s using it (mostly this one though: 😎). What we love most about emojis is that they have their own code, sort of an emotional language that people use to show how they feel about certain things. Just with the tap of a button on their smartphones.

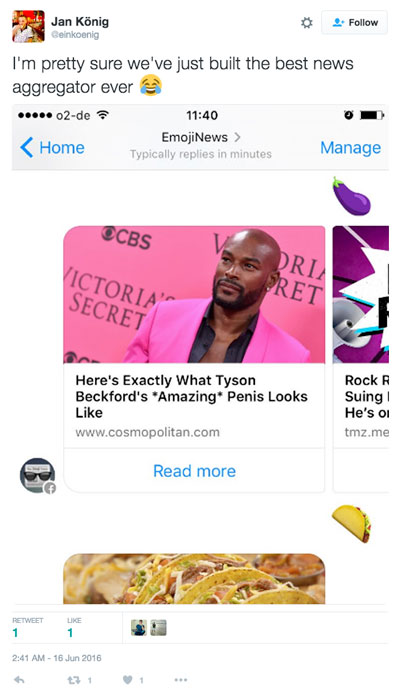

Even better, we noticed that people use a lot of emojis when they share stuff on Twitter and Facebook. By adding a laughing emoji it’s possible to tell your friends how you feel about this article you just read. If you combine this for all of the things that are shared across the web, it’s like an emotional layer for news.

EmojiNews: Why we built it

Wouldn’t it be amazing to be able to access this layer and filter news by the emojis people use when they share links? We thought so! And since we’ve also been thinking about the various input types for Facebook Messenger and wanted to experiment with commands other than just text, this was a great project for us to play with. During a hackathon in June, we came up with the idea and built the first prototype of the EmojiNews chatbot. You can try it here: EmojiNews on Facebook Messenger

Three iterations and lots of tweaks later, we can look back at many decisions that improved the UX of our chatbot (at least that’s what we think!). In this post, we’ll share what we learned along the way.

Disclaimer: This messenger bot doesn’t have anything to do with the one by Fusion (read something about it over at DigiDay). We came up with the idea during a hackathon and didn’t worry much about the name. While our chatbot screens the social web for news articles that are shared with emojis, Fusion’s chatbot tells the news by using emojis instead of text. Sorry for causing any confusion (lol).

First prototype: Let’s see how people react

With the first release of the EmojiNews Bot, we wanted to start with very simple functionality.

We took a bunch of RSS feeds, looked up the article links through the Twitter API, and saved references to the emojis that appeared in tweets containing those links. We also used the Facebook API to see which emojis certain pages use when they share links to their stories.
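To make the idea concrete, here is a minimal sketch of how tweets could be turned into an emoji-to-article index. It is not our production code: the function names (`index_tweets`, `articles_for`) are made up for illustration, the tweets are plain strings instead of Twitter API responses, and the emoji regex covers only a rough, incomplete set of Unicode ranges.

```python
import re
from collections import Counter, defaultdict

# Assumption: a rough, incomplete set of emoji code point ranges.
EMOJI_RE = re.compile("[\U0001F300-\U0001FAFF\u2600-\u27BF]")
URL_RE = re.compile(r"https?://\S+")


def index_tweets(tweets):
    """Map each article URL to a Counter of the emojis in tweets sharing it."""
    index = defaultdict(Counter)
    for text in tweets:
        emojis = EMOJI_RE.findall(text)
        for url in URL_RE.findall(text):
            index[url].update(emojis)
    return index


def articles_for(index, emoji):
    """Return URLs that were shared with the given emoji, most frequent first."""
    hits = [(counts[emoji], url) for url, counts in index.items() if counts[emoji] > 0]
    return [url for _, url in sorted(hits, key=lambda hit: (-hit[0], hit[1]))]


tweets = [
    "So funny 😂😂 https://example.com/a",
    "wow 😂 https://example.com/b",
    "meh https://example.com/c",
    "💩 https://example.com/a",
]
index = index_tweets(tweets)
print(articles_for(index, "😂"))
```

Sending an emoji to the bot then boils down to a lookup like `articles_for(index, "💩")`.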

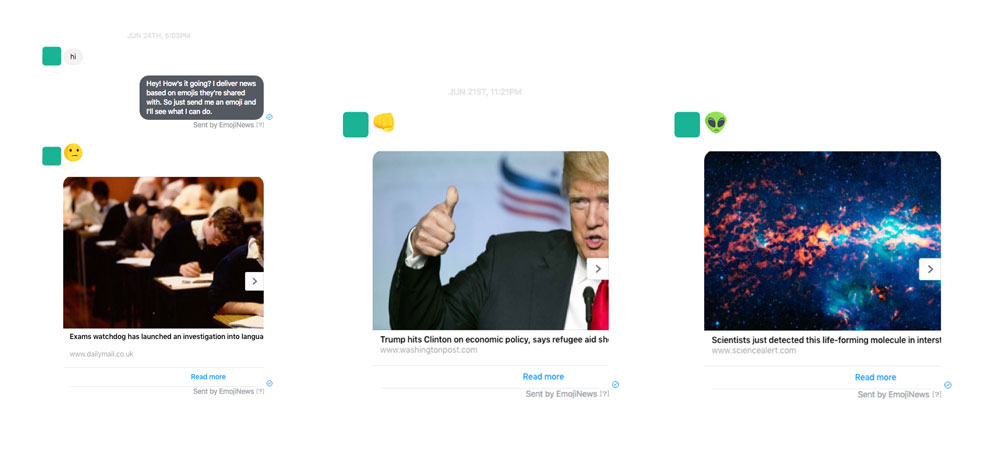

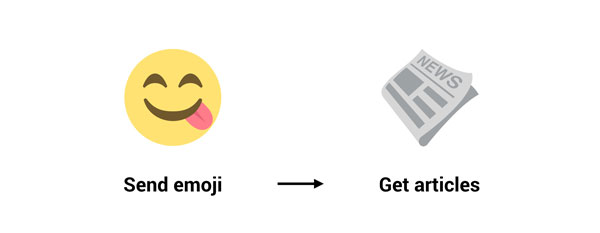

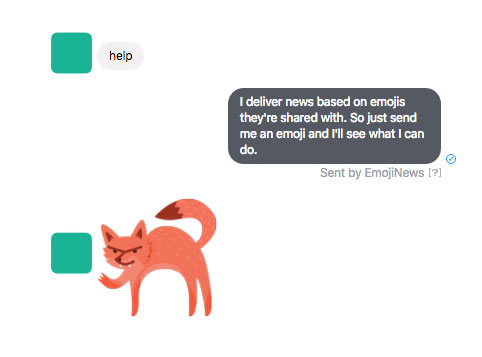

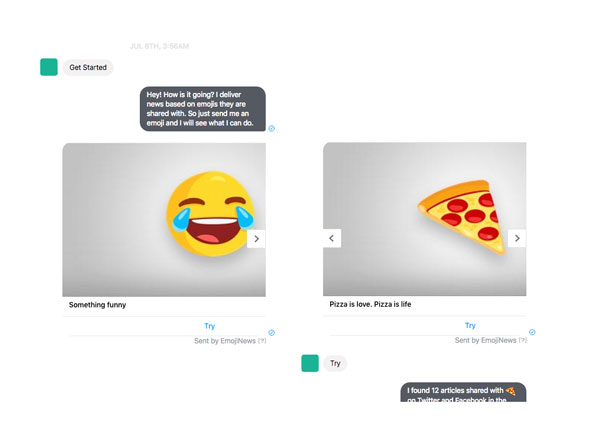

Here’s what the basic functionality looked like:

We made clear it only worked with emojis, nothing else.

We loved playing with it ourselves. It came with an element of surprise: What happens if I use the eggplant emoji 🍆? Will there be Trump articles shared with the poop emoji 💩? What about the major key 🔑?

UX learnings from this iteration

- Build unpredictability into the chatbot experience so people play around and stay curious

- Provide very narrow functionality at first and see how people use it (single purpose bots)

Second iteration: Help people understand how it works

We published the bot and let it rest for a little while (to work on our main product HashtagNow). However, people seemed to search for “emoji” in their Messenger app, so some users started coming in.

The great (and maybe even a little creepy) thing about Facebook Messenger is that you can watch people interact with your chatbot. This really helped us figure out what worked (and what didn’t). With our initial users, we saw mainly two problems:

- People didn’t understand the functionality

- People didn’t play around a lot

For the second iteration, we made a few very simple changes to solve these problems. Here’s what we tried to do:

Make functionality clearer

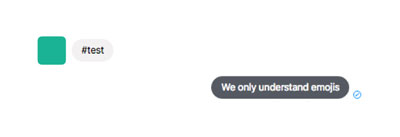

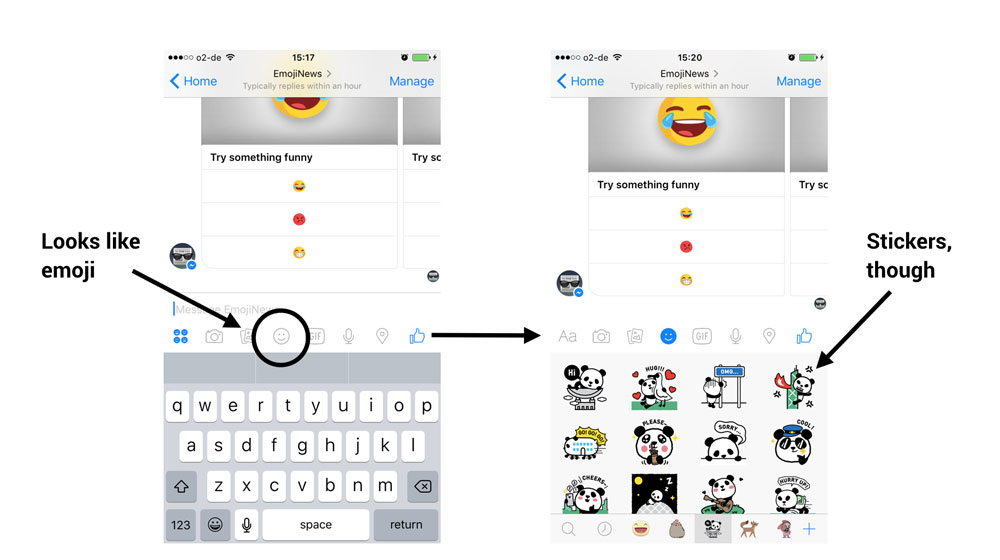

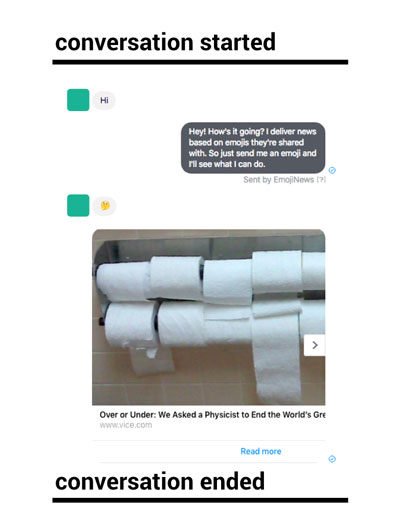

This is what many conversations looked like at the beginning. Stickers don’t work with EmojiNews, as they aren’t used to share links on social media. The main problem with stickers is that Facebook uses different types of icons to access them. This caused confusion, so people ended up using stickers when they actually wanted to go with emojis.

To help people understand which input types can be used, we provided some example emojis in a gallery element. The “Try” button should make it as easy as possible to activate users.

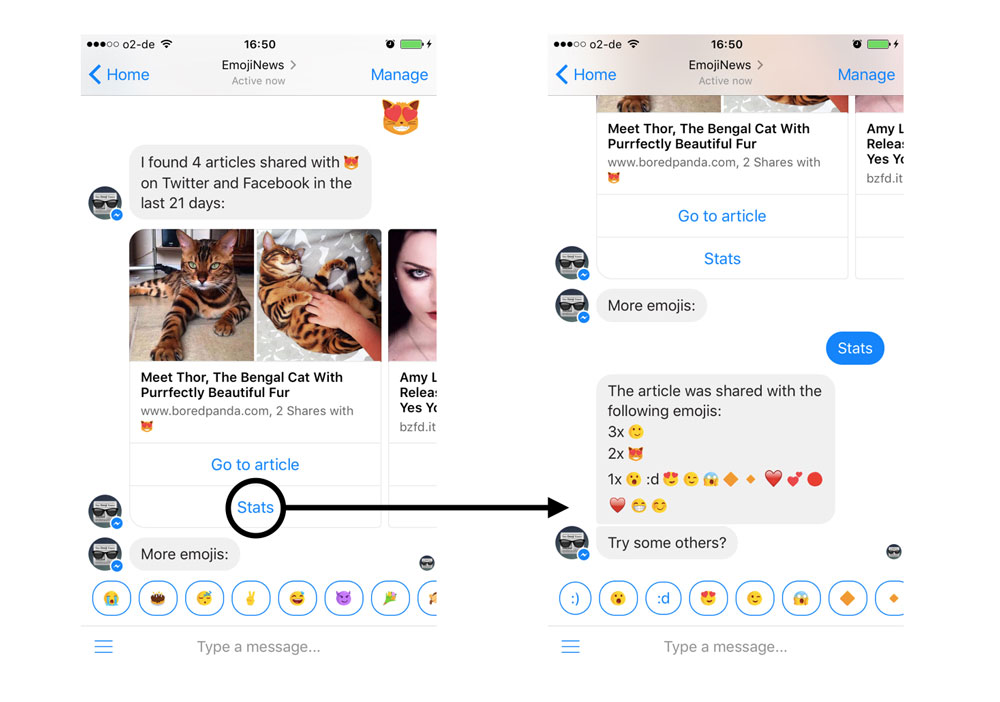

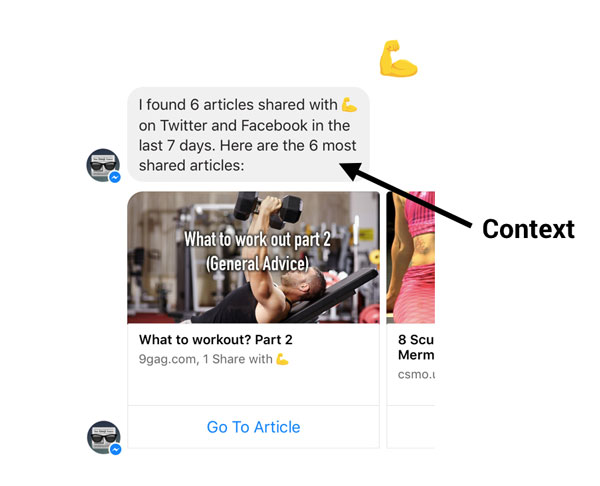

Another problem was the lack of context provided with the article recommendations. It was just input-output. People didn’t know where the articles came from and thus didn’t really play around, as they thought it was just a curated collection where the categories are replaced by emojis. To change that, we implemented a preceding message that comes with every emoji to provide some context. Here’s what it looked like:

Increase dwell time

What bugged us most: people didn’t even come far enough to experience the playful unexpectedness that comes with the bot (e.g. when you try emojis like 💩🍆🍕👄). Conversations ended way too soon. They usually looked like this:

Of course we want people to stay and to play around as much as possible. Here’s how we tried to increase the time spent with EmojiNews:

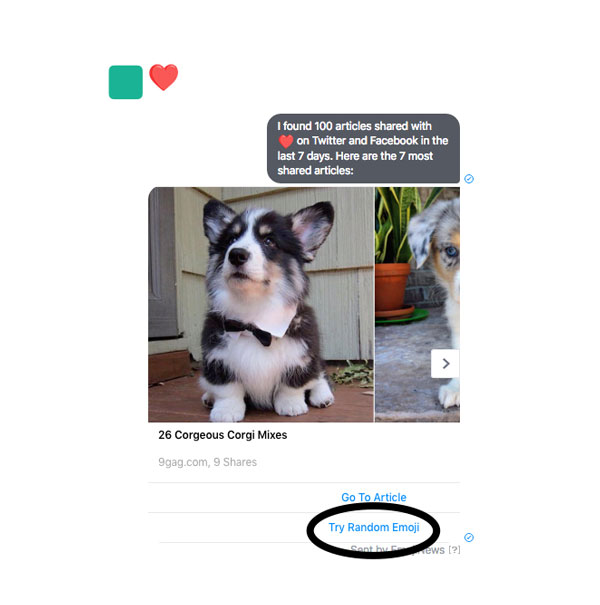

Sometimes, people don’t want to think or do any work at all. If I have to open my emoji keyboard and think of an appropriate emoji the first time I use a bot, I might get annoyed. We set up random emoji buttons to keep people engaged with a single tap on the screen. They also add some randomness. (Note that quick replies weren’t available at this time.)

UX learnings from this iteration

- Provide context

- Provide options to choose from, help them think

- Always provide an option to keep users engaged, never leave them hanging

Third iteration: Quick replies are magic

After we saw a few people coming back very often, always trying the newest emojis, we decided to enhance the current features. We found the introduction of quick replies and the persistent menu especially useful.

Here’s what we changed:

- Added quick replies to increase dwell time

- Added a “Stats” section

- Added more features to explain functionality

- Added a persistent menu

- Warned people about longer processing times

- Increased variability for text

- Smoke Test: Subscribe to Emoji

Quick Replies

The buttons at the end of messages always felt a bit clunky and limiting, as there are only up to three possible, and they are strictly tied to single elements. With the quick replies, Facebook introduced a new feature that changed that. As they wrote in their announcement, quick replies “offer a more guided experience for people as they interact with your bot, which helps set expectations on what the bot can do.” That’s perfect for our use case of showing example emojis to help people come up with ideas!

The “random emoji” button from the previous section was used by many people, but didn’t really invite them to play around. With the quick replies, users still have the option to choose, while being offered some examples they can just tap on.
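For illustration, a message with emoji quick replies could be built roughly like this. The field names follow the quick replies format of the Messenger Send API as we understand it, so please check the current platform docs before relying on them. The helper name `quick_reply_message`, the `EMOJI_` payload prefix, and the `USER_ID` placeholder are our own assumptions, and the sketch only builds the request body without sending it.

```python
import json


def quick_reply_message(recipient_id, text, emojis):
    """Build a message body that offers each emoji as a tappable quick reply."""
    return {
        "recipient": {"id": recipient_id},
        "message": {
            "text": text,
            "quick_replies": [
                # The payload naming scheme is hypothetical.
                {"content_type": "text", "title": emoji, "payload": f"EMOJI_{emoji}"}
                for emoji in emojis
            ],
        },
    }


body = quick_reply_message("USER_ID", "Try one of these:", ["😂", "💩", "🍕"])
print(json.dumps(body, ensure_ascii=False, indent=2))
```

Because the title of each quick reply is the emoji itself, a tap behaves like the user typing that emoji.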

Stats

Although we gave it our best in previous iterations, people still didn’t play around as much as we would have liked. This is why we introduced a new Stats feature that shows all the other emojis used with an article (like related categories/tags) and thus offers an opportunity to explore similar content. We’re very interested to see whether and how people will use this feature.
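As a rough sketch, the lookup behind such a Stats view could be as simple as the following. It assumes that emoji counts per article are already stored as a `Counter`; the function name `related_emojis` and the `limit` parameter are hypothetical.

```python
from collections import Counter


def related_emojis(article_counts, current, limit=5):
    """Return the other emojis used with an article, most frequent first."""
    others = Counter({e: n for e, n in article_counts.items() if e != current})
    return [emoji for emoji, _ in others.most_common(limit)]


counts = Counter({"😂": 12, "💩": 3, "🔥": 7})
print(related_emojis(counts, "😂"))
```

Each returned emoji can then be offered as the next thing to tap, much like a related tag.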

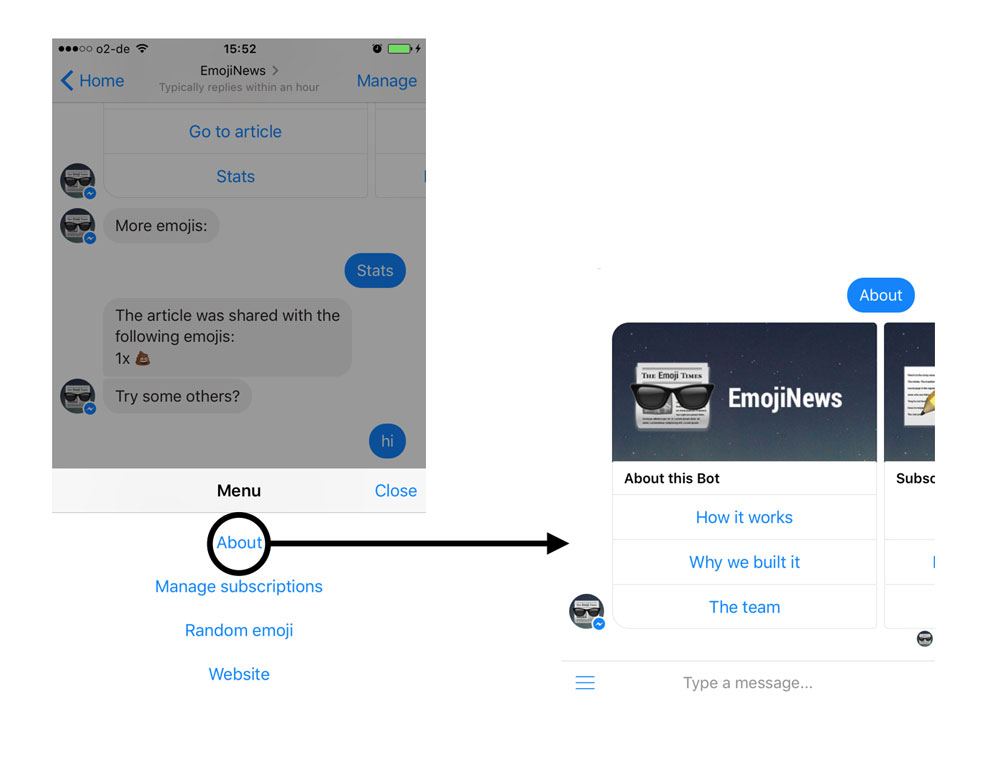

Persistent Menu & About

The persistent menu is a must-have feature: One tap away, users can access the bot’s most important features. We’ve already seen people using the “Random emoji” command a lot. Also, we added a gallery element to explain the functionality (for more context):

Processing Times

One of the more annoying things: We’re dependent on the speed of third-party content, which sometimes causes longer loading times. In previous iterations, it sometimes happened that people clicked on the same button twice because they thought something went wrong.

In this iteration, we implemented a preceding message to provide feedback that processing times might be a bit longer than expected:

Also, we used about 10 different texts for this feature to make it feel less generic, such as “Give me a second..”, “Just a little bit…”, and “Tracking down articles for you…”.
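A minimal sketch of this kind of text rotation is shown below. The three messages are the ones quoted above; the helper name `pick_wait_message` and the rule of not repeating the previous message are our own assumptions for the example.

```python
import random

WAIT_MESSAGES = [
    "Give me a second..",
    "Just a little bit…",
    "Tracking down articles for you…",
]


def pick_wait_message(last=None):
    """Pick a random waiting message, avoiding an immediate repeat if possible."""
    options = [m for m in WAIT_MESSAGES if m != last] or WAIT_MESSAGES
    return random.choice(options)


first = pick_wait_message()
second = pick_wait_message(last=first)
print(first)
print(second)
```

Remembering the last message per user is enough to keep two slow requests in a row from getting the same reply.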

Smoke Test: Subscribe to Emoji

We saw some people use the same emojis over and over. So we thought: Why not offer the ability to subscribe to certain emojis and stay in the know if something really 😂 or 💩 happens?

This is a smoke test: Nothing happens right now, but as soon as we see people subscribing, we’ll set up a cronjob to deliver articles that are trending with certain emojis.

Also, we love Messenger’s built-in animations, and we used them to give positive feedback about the subscription. See what it looks like when you send balloons:

Lovely, right?

UX learnings from this iteration

- Use quick replies to provide sample inputs (again, help users think)

- Use variable text patterns to make the conversation feel less generic

- Step right into the main feature, but provide the option to dig deeper to see how it works (about-menu)

- Give feedback about user input (even when loading times are a bit longer)

Fourth iteration: Early feedback

We just launched the newest version and we’ll hopefully learn a lot about user interactions with it. Here are a few things that already came up, though:

- Generic text

- Amount of articles

- Subscription feature

Generic text

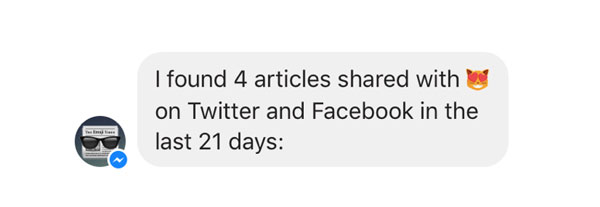

The preceding messages that provide context currently look like this:

That’s very generic and not delightful to use. We’re currently working on variable text patterns to keep the conversation less generic and more fun. This way, we can also add more unexpectedness to the experience.

Amount of articles

We screen more than 100 RSS feeds and Facebook pages and have made millions of Twitter API requests for more than 180,000 articles so far. However, the number of articles shared with emojis isn’t high enough (only about 8% of tweets with article links contain emojis), so we need to make more calls to keep the database updated. This is especially important with the introduction of the subscription feature.

Emoji Subscription

This will be fun! We’ll share our learnings about this feature at a later point.

Thanks!

Thanks for reading this far! We know it’s a very long article, but we hope it provided some insights into chatbot UX. If you liked it, maybe you want to share it?

Header photo by Markus Spiske, emojis in the subheader images by Emoji One!